System Design Web Crawler

In a system design question, understand the scope of the problem and stay true to the original problem. A web crawler can compile information on niche subjects from various resources into a single platform. One of the most famous distributed web crawlers is Google's, which indexes pages across the web.

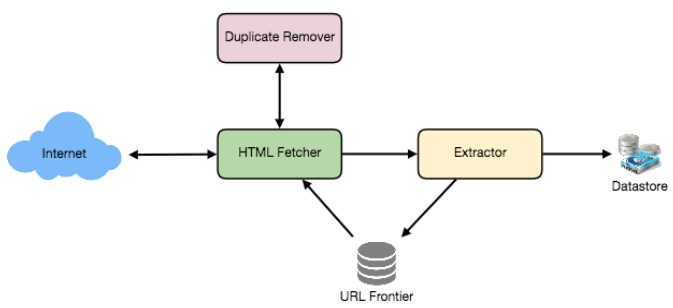

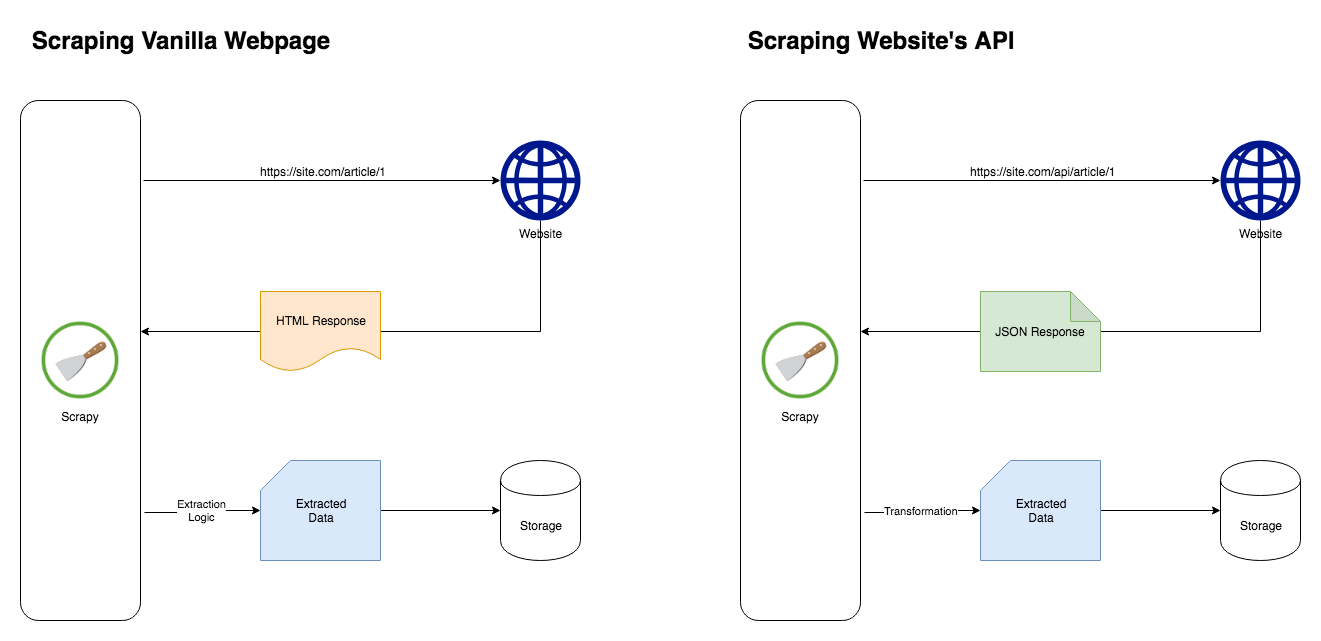

A web crawler, also known as a robot or a spider, is a system for the bulk downloading of web pages. The goal is a scalable service that can crawl the entire web and collect hundreds of millions of web documents.
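A rough back-of-envelope estimate shows what "hundreds of millions of documents" implies for throughput and storage. The inputs are illustrative assumptions, not figures from this design: 500 million pages crawled over 30 days, with an average page size of 100 KB (decimal units).

$$
\frac{5 \times 10^{8}\ \text{pages}}{30 \times 24 \times 3600\ \text{s}} = \frac{5 \times 10^{8}}{2.592 \times 10^{6}\ \text{s}} \approx 193\ \text{pages/s}
$$

$$
5 \times 10^{8}\ \text{pages} \times 100\ \text{KB} = 5 \times 10^{10}\ \text{KB} = 50\ \text{TB}
$$

This is an average rate; peak fetch rates and storage for revisited pages would push the real requirements higher.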

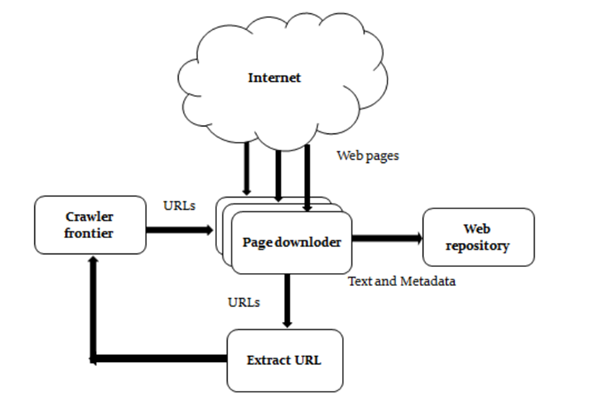

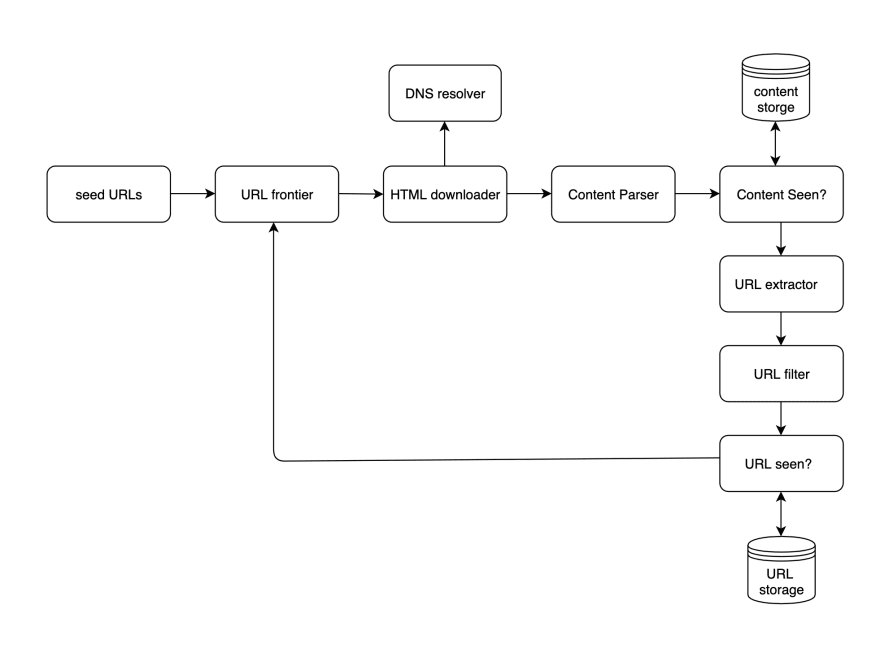

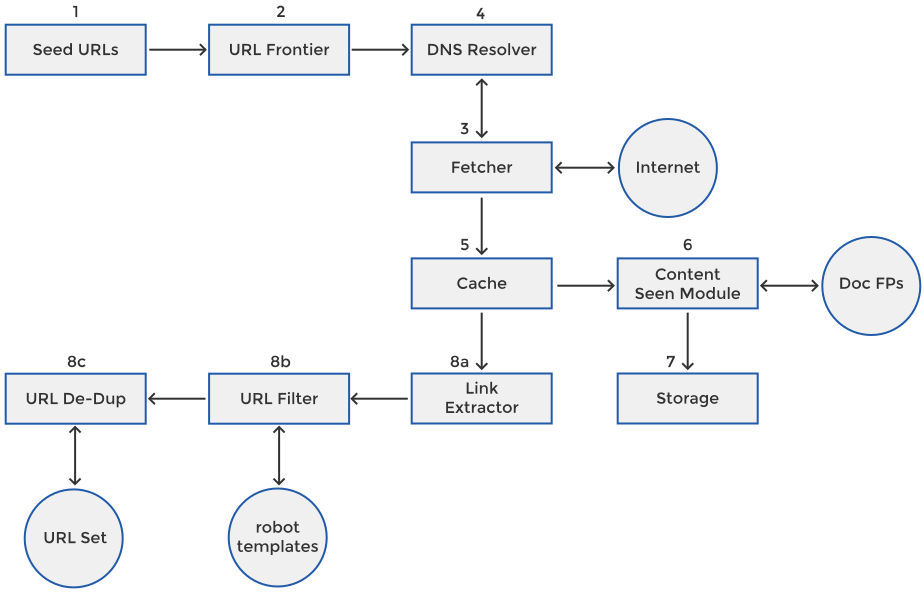

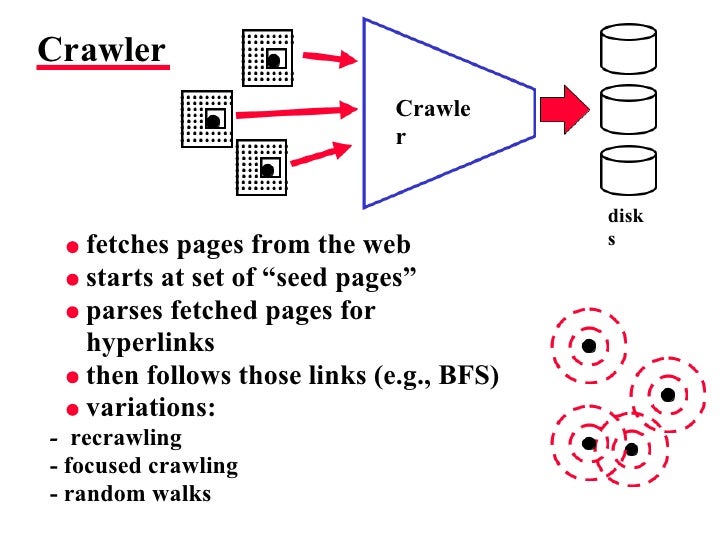

A crawler collects documents by recursively fetching links from a set of starting pages. Besides search engines, you can build a web crawler for other purposes as well.
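The recursive fetching described above is usually implemented iteratively, with a queue (the "frontier") of URLs still to visit and a set of URLs already queued. The following is a minimal breadth-first sketch under that assumption; `fetch_links` is a hypothetical callback standing in for the real HTTP download and link extraction, and a small in-memory dictionary plays the role of the web.

```python
from collections import deque
from typing import Callable, Iterable


def crawl(
    seeds: Iterable[str],
    fetch_links: Callable[[str], list[str]],
    max_pages: int = 100,
) -> list[str]:
    """Breadth-first crawl starting from seed URLs.

    fetch_links is a hypothetical callback: given a URL, it returns the URLs
    that page links to. A real crawler would perform an HTTP request here.
    """
    frontier = deque(seeds)  # URLs waiting to be fetched
    seen = set(frontier)     # URLs already queued, so each is fetched once
    order = []               # pages in the order they were crawled
    while frontier and len(order) < max_pages:
        url = frontier.popleft()
        order.append(url)
        for link in fetch_links(url):
            if link not in seen:  # skip links that are already queued or crawled
                seen.add(link)
                frontier.append(link)
    return order


# A tiny in-memory "web" standing in for real HTTP fetches.
fake_web = {
    "a.com": ["b.com", "c.com"],
    "b.com": ["a.com", "d.com"],
    "c.com": ["d.com"],
    "d.com": [],
}
print(crawl(["a.com"], lambda url: fake_web.get(url, [])))
# ['a.com', 'b.com', 'c.com', 'd.com']
```

Because `b.com` links back to `a.com`, the `seen` set is what keeps this loop from fetching the same page forever; `max_pages` bounds the crawl when the link graph is effectively infinite.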

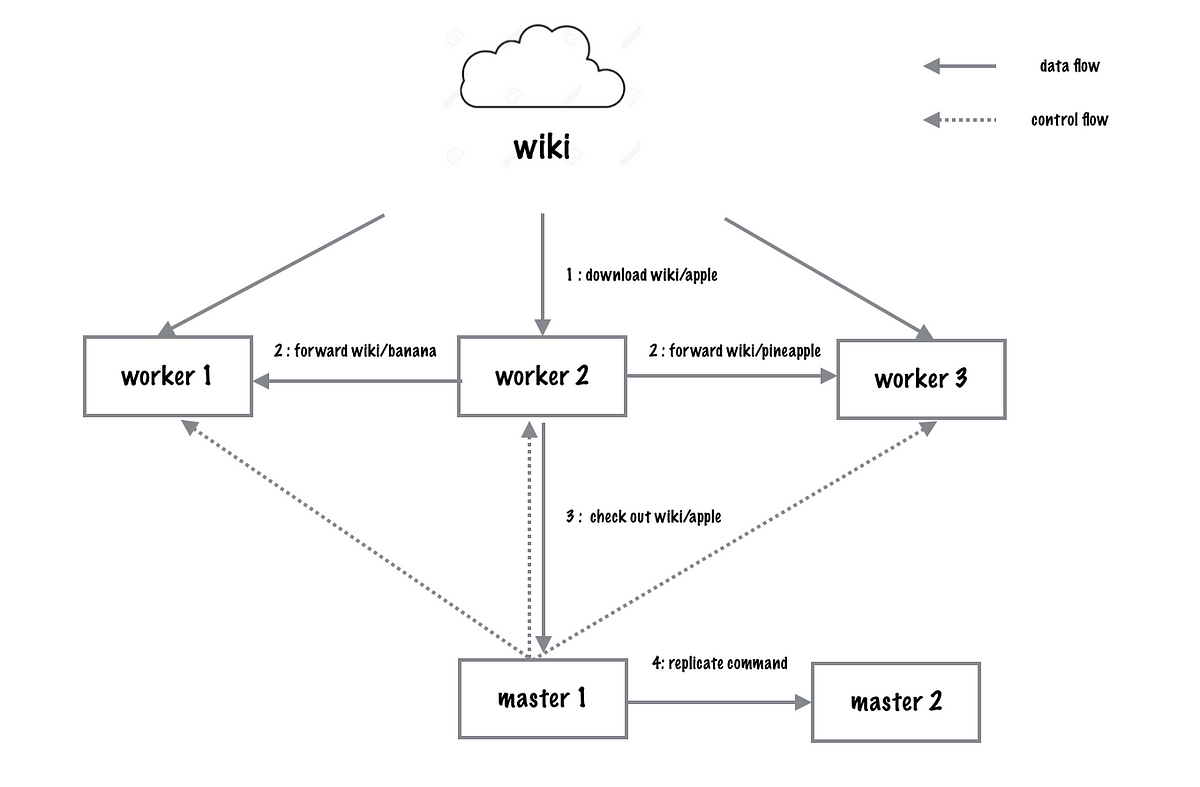

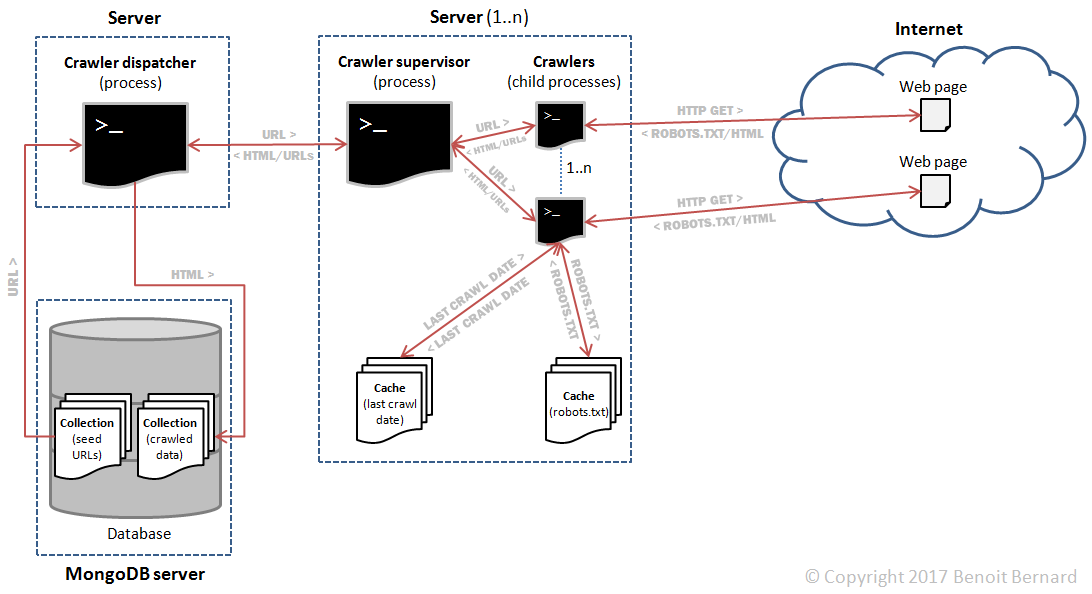

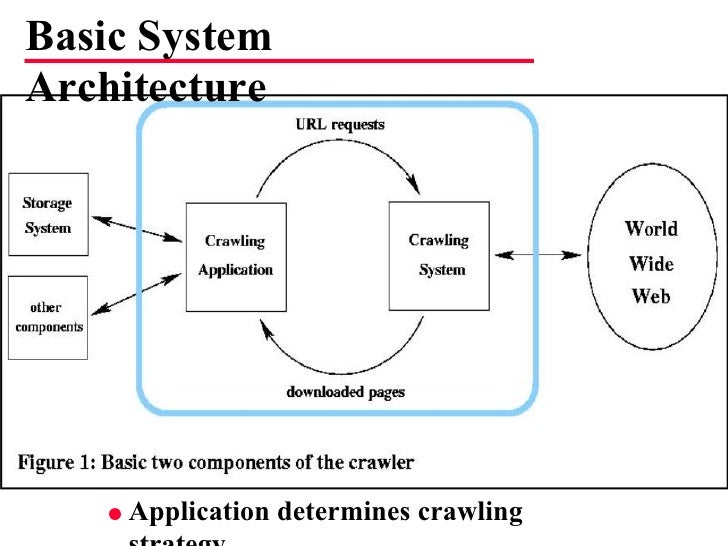

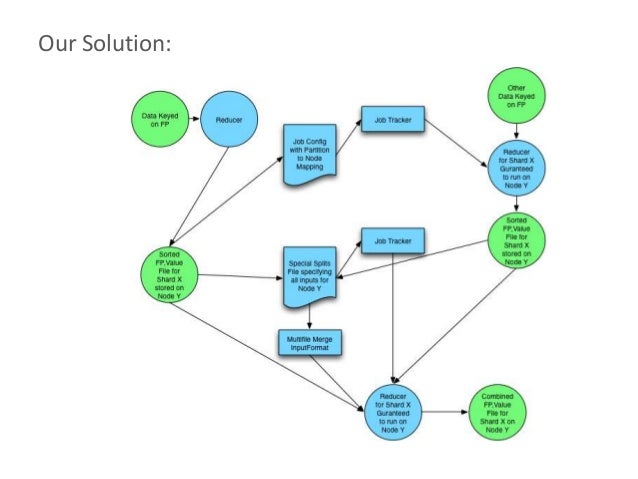

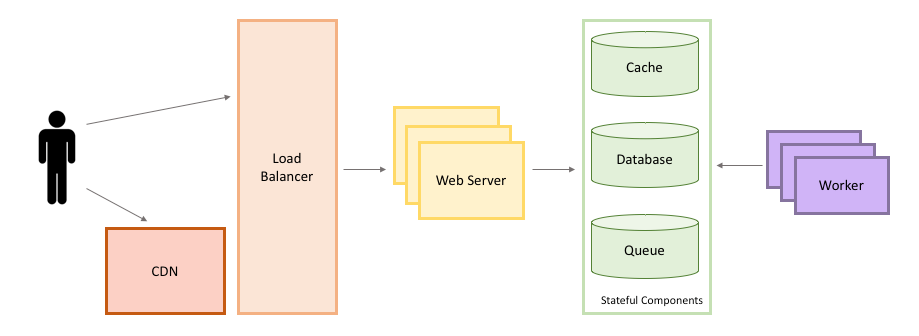

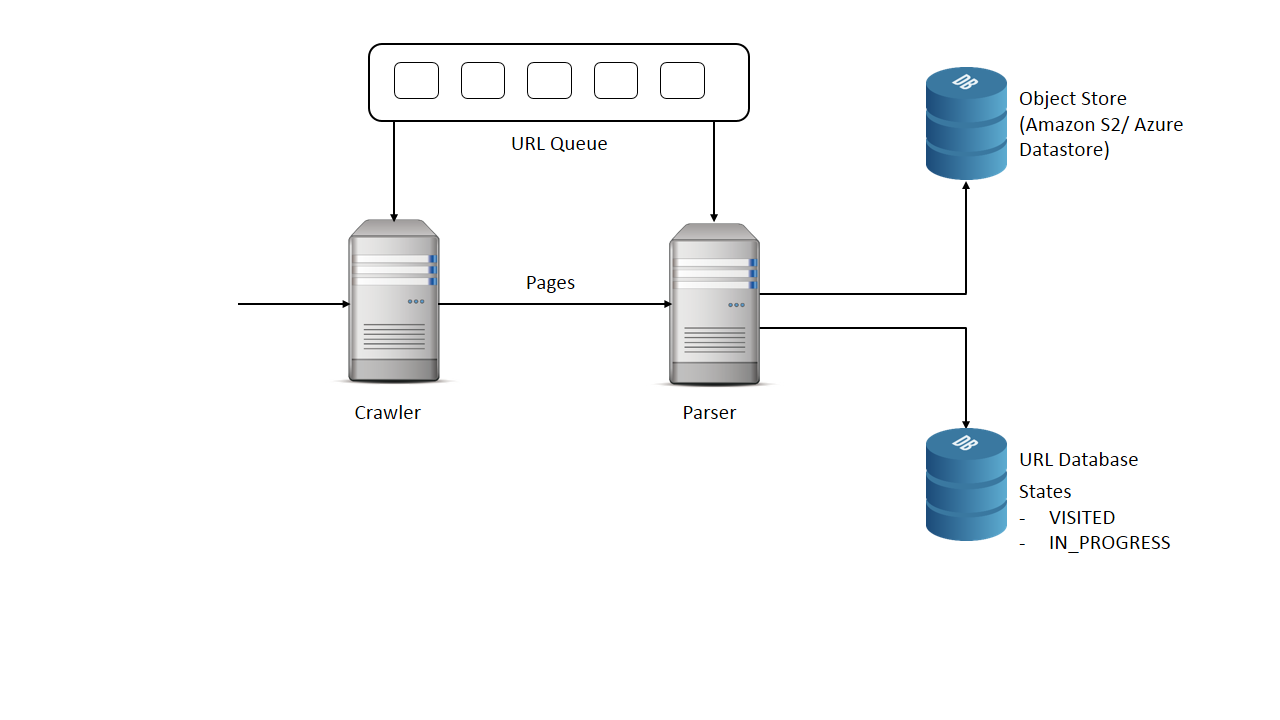

Our system consists of four major components, shown in Figure 1. A distributed web crawler typically employs several machines to perform crawling.
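The text does not say how URLs are divided among the crawling machines. One common approach, sketched here as an assumption, is to hash each URL's host name so that every page of a site lands on the same machine, which keeps per-site politeness limits local to one crawler. `assign_machine` is a hypothetical helper; MD5 is used only because it gives the same value in every process, unlike Python's salted built-in `hash()` for strings.

```python
import hashlib
from urllib.parse import urlsplit


def assign_machine(url: str, num_machines: int) -> int:
    """Map a URL to a crawler machine index by hashing its host name."""
    host = urlsplit(url).hostname or ""  # .hostname is already lower-cased
    digest = hashlib.md5(host.encode("utf-8")).digest()
    # Take 64 bits of the digest and reduce them to a machine index.
    return int.from_bytes(digest[:8], "big") % num_machines


for u in ["https://example.com/a", "https://example.com/b", "https://example.org/"]:
    print(u, "->", assign_machine(u, 4))
```

Both `example.com` URLs always map to the same machine. A plain modulo has one drawback: changing `num_machines` remaps most hosts, which is why schemes such as consistent hashing are often preferred when machines join or leave.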

Design Web Crawler is a system design question that big tech companies use in their interviews. A web crawler (also called a spider or a search engine bot) downloads and indexes content from all over the Internet. Pages with duplicate content should be ignored.
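One simple way to ignore duplicate pages, assumed here rather than taken from the source, is to store a fingerprint (a cryptographic hash) of each page's content and skip any page whose fingerprint has been seen before. `DuplicateDetector` is a hypothetical name; the whitespace normalization is an illustrative choice.

```python
import hashlib


class DuplicateDetector:
    """Flags pages whose content was already seen, using a content fingerprint."""

    def __init__(self) -> None:
        self._fingerprints: set[str] = set()

    @staticmethod
    def fingerprint(content: str) -> str:
        # Collapse runs of whitespace so trivially reformatted copies hash the same.
        normalized = " ".join(content.split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def is_duplicate(self, content: str) -> bool:
        """Return True if this content was seen before; otherwise remember it."""
        fp = self.fingerprint(content)
        if fp in self._fingerprints:
            return True
        self._fingerprints.add(fp)
        return False


detector = DuplicateDetector()
print(detector.is_duplicate("<p>Hello  world</p>"))  # False: first time seen
print(detector.is_duplicate("<p>Hello world</p>"))   # True: same text after normalization
```

Exact hashing only catches identical content (after normalization); detecting near-duplicates, such as the same article with a different ad block, needs similarity techniques like SimHash.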

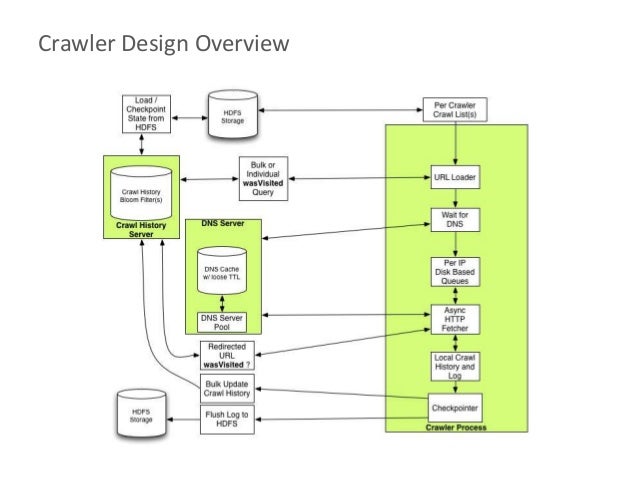

The scope was to design a web crawler using available distributed system constructs, NOT to design a distributed database or a distributed cache. Google Search relies on a web crawler that indexes websites so that it can find pages for us. In our research design, we implemented a focused crawler for Dark Web forums.
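A focused crawler only expands pages that look relevant to its topic. The source does not describe how its focused crawler scores relevance, so the sketch below is a generic illustration: a best-first frontier ordered by a toy keyword-overlap score. `relevance`, `focused_crawl`, the `threshold`, and the keyword set are all hypothetical; a real focused crawler would typically use a trained classifier.

```python
import heapq
from typing import Callable


def relevance(text: str, keywords: set[str]) -> float:
    """Fraction of (lower-case) topic keywords that appear in the text."""
    if not keywords:
        return 0.0
    words = set(text.lower().split())
    return len(words & keywords) / len(keywords)


def focused_crawl(
    seed: str,
    fetch: Callable[[str], tuple[str, list[str]]],
    keywords: set[str],
    threshold: float = 0.5,
    max_pages: int = 50,
) -> list[str]:
    """Best-first crawl that only follows links found on on-topic pages."""
    frontier = [(-1.0, seed)]  # heapq is a min-heap, so scores are negated
    seen = {seed}
    relevant = []
    fetched = 0
    while frontier and fetched < max_pages:
        _, url = heapq.heappop(frontier)
        text, links = fetch(url)
        fetched += 1
        score = relevance(text, keywords)
        if score < threshold:
            continue  # off-topic page: do not follow its links
        relevant.append(url)
        for link in links:
            if link not in seen:
                seen.add(link)
                # Unfetched links inherit their parent's score as a priority guess.
                heapq.heappush(frontier, (-score, link))
    return relevant


pages = {
    "seed": ("forum post about crawler design", ["p1", "p2"]),
    "p1": ("crawler frontier design notes", ["p3"]),
    "p2": ("cooking recipes", ["p4"]),
    "p3": ("more crawler design", []),
    "p4": ("crawler design", []),
}
print(focused_crawl("seed", lambda u: pages[u], {"crawler", "design"}))
# ['seed', 'p1', 'p3']
```

Note that `p4` is never fetched even though it is on-topic, because it is only reachable through the off-topic `p2`. That is the usual trade-off of pruning at off-topic pages.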

The crawler must also avoid getting stuck in cycles. For example, a symbolic link within a file system can create a cycle.
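A symlink loop served over HTTP typically shows up as ever-longer URLs such as `/a/a/a/...`, and the same page is often reachable under several spellings of its URL. A minimal defense, sketched under assumed rules, is to record visited pages by a normalized URL and to refuse paths deeper than a fixed cap. `MAX_PATH_DEPTH`, `normalize_url`, `is_probable_trap`, and `should_crawl` are hypothetical names, and the normalization covers only a few common cases.

```python
from urllib.parse import urlsplit, urlunsplit

MAX_PATH_DEPTH = 8  # hypothetical limit on the number of path segments
DEFAULT_PORTS = {"http": 80, "https": 443}


def normalize_url(url: str) -> str:
    """Canonicalize a URL so different spellings of the same page share one key."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    netloc = (parts.hostname or "").lower()
    if parts.port is not None and parts.port != DEFAULT_PORTS.get(scheme):
        netloc = f"{netloc}:{parts.port}"  # keep only non-default ports
    path = parts.path or "/"
    # The fragment (#...) never changes the fetched document, so drop it.
    return urlunsplit((scheme, netloc, path, parts.query, ""))


def is_probable_trap(url: str) -> bool:
    """Flag URLs whose path is suspiciously deep, e.g. /a/a/a/... from a symlink loop."""
    segments = [s for s in urlsplit(url).path.split("/") if s]
    return len(segments) > MAX_PATH_DEPTH


visited: set[str] = set()


def should_crawl(url: str) -> bool:
    """Crawl a URL only once (by normalized form) and skip likely traps."""
    key = normalize_url(url)
    if key in visited or is_probable_trap(key):
        return False
    visited.add(key)
    return True


print(normalize_url("HTTP://Example.COM:80/a#top"))  # http://example.com/a
```

A depth cap can also reject legitimate deep pages, so in practice it is one signal among several, alongside per-site page limits.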

Let's design a web crawler that will systematically browse and download the World Wide Web.

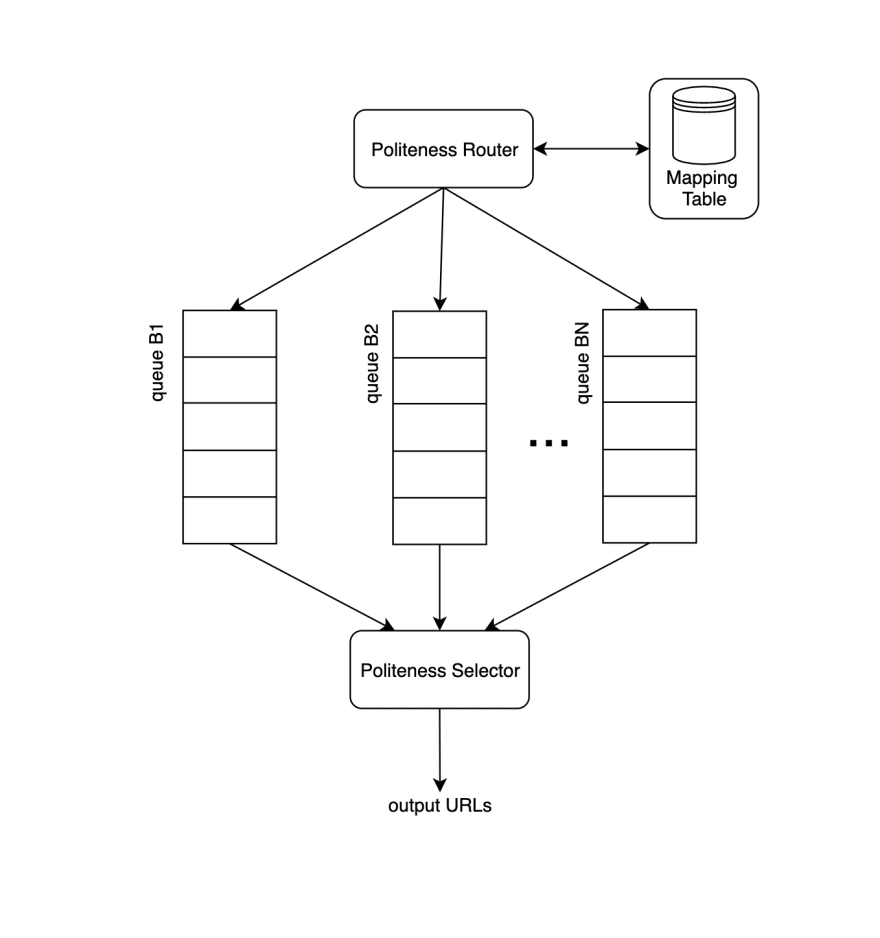

Many sites, particularly search engines, use web crawling as a means of providing up-to-date data. A web crawler is a computer program used to collect key values such as HREF links, image links, and metadata from a given website URL. The design should also account for newly added or edited web pages.

When a new node is added to the system, a small fraction of the links (crawled or not) that were, or will be, crawled by an old node migrate to the new node. When a node goes offline, the links assigned to it are shared across the other nodes.
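The text describes this rebalancing behavior but does not name the partitioning scheme. Consistent hashing is one standard technique with exactly these properties, and the sketch below illustrates it under that assumption. `ConsistentHashRing`, `node_for`, the node names, and the choice of 100 virtual nodes per physical node are hypothetical; MD5 is used only as a stable hash.

```python
import bisect
import hashlib


def ring_position(key: str) -> int:
    """Stable 64-bit position on the ring (built-in hash() is salted per process)."""
    return int.from_bytes(hashlib.md5(key.encode("utf-8")).digest()[:8], "big")


class ConsistentHashRing:
    """Assigns keys (e.g. host names) to crawler nodes via consistent hashing."""

    def __init__(self, nodes=(), replicas: int = 100) -> None:
        self.replicas = replicas  # virtual nodes per physical node, to even out load
        self._ring: list[tuple[int, str]] = []  # sorted (position, node) pairs
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        for i in range(self.replicas):
            bisect.insort(self._ring, (ring_position(f"{node}#{i}"), node))

    def remove_node(self, node: str) -> None:
        # Keys that mapped to this node fall through to the next node clockwise.
        self._ring = [(pos, n) for pos, n in self._ring if n != node]

    def node_for(self, key: str) -> str:
        if not self._ring:
            raise ValueError("no nodes in the ring")
        # First virtual node at or after the key's position, wrapping to the start.
        idx = bisect.bisect(self._ring, (ring_position(key),))
        return self._ring[idx % len(self._ring)][1]


hosts = [f"site{i}.com" for i in range(1000)]
ring = ConsistentHashRing(["node-a", "node-b", "node-c"])
before = {h: ring.node_for(h) for h in hosts}
ring.add_node("node-d")
after = {h: ring.node_for(h) for h in hosts}
moved = [h for h in hosts if before[h] != after[h]]
print(f"{len(moved)} of {len(hosts)} hosts moved")  # every moved host now maps to node-d
```

Adding `node-d` only moves the hosts it takes over, roughly a quarter of them with four nodes, and calling `remove_node("node-d")` hands exactly those hosts back to their previous owners while every other assignment stays put.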

The crawler follows the HREF links fetched from the previous URL, so it can jump from one website to other websites. The design separates the crawler (the write path) from the indexer (the read path).
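Following HREF links starts with extracting them from each downloaded page. The sketch below uses Python's standard `html.parser.HTMLParser` to collect anchor links, image links, and `<meta>` name/content pairs, resolving relative URLs against the page URL with `urljoin` so the crawler can queue them. `PageExtractor` is a hypothetical name, and real pages would also need handling for `<base>` tags, `rel="nofollow"`, and non-HTTP schemes.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class PageExtractor(HTMLParser):
    """Collects href links, image links, and <meta> name/content pairs from one page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []
        self.images: list[str] = []
        self.meta: dict[str, str] = {}

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # attrs arrives as a list of (name, value) pairs
        if tag == "a" and attrs.get("href"):
            # Resolve relative links against the page URL so they can be fetched later.
            self.links.append(urljoin(self.base_url, attrs["href"]))
        elif tag == "img" and attrs.get("src"):
            self.images.append(urljoin(self.base_url, attrs["src"]))
        elif tag == "meta" and attrs.get("name") and attrs.get("content") is not None:
            self.meta[attrs["name"]] = attrs["content"]


html = (
    '<html><head><meta name="description" content="Crawler demo"></head>'
    '<body><a href="/about">About</a><a href="https://other.org/">Other</a>'
    '<img src="logo.png"></body></html>'
)
page = PageExtractor("https://example.com/index.html")
page.feed(html)
print(page.links)   # ['https://example.com/about', 'https://other.org/']
print(page.images)  # ['https://example.com/logo.png']
print(page.meta)    # {'description': 'Crawler demo'}
```

The link to `other.org` is how the crawler "jumps" to another website: it is simply queued like any other URL.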
